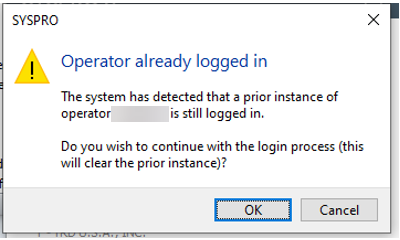

SYSPRO Error Message: Operator Already Logged In

Most SYSPRO users will eventually see the “Operator already logged in” warning. Under normal circumstances, the message means exactly what it says: the operator is already signed in.

However, the message can also appear for reasons that may puzzle the user. It is most often associated with users not exiting SYSPRO through normal means (crashes, forced computer shutdowns, etc.). Both the ERP administrator and end users benefit from knowing what this message means and why it may appear even when the user is not signed in.

What does the “Operator already logged in” message mean?

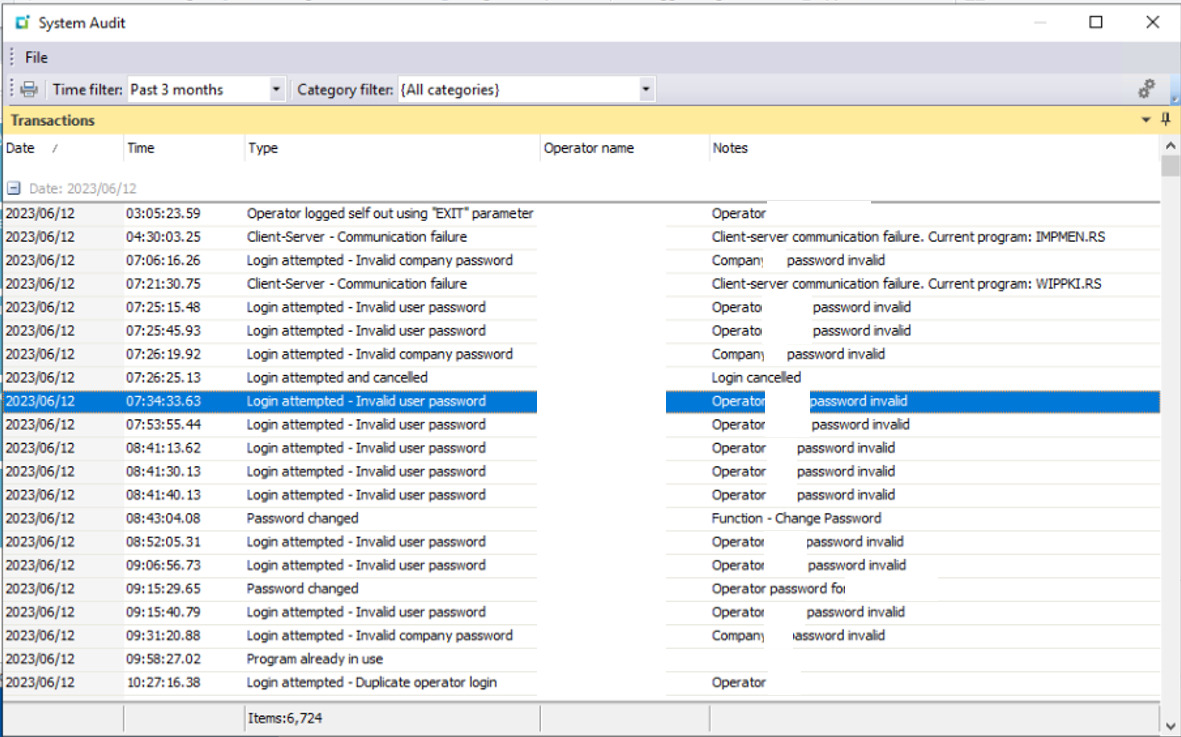

SYSPRO’s database contains a table called AdmOperator. One column in this table indicates whether a SYSPRO operator is currently signed in. The column is set to “Y” when a user signs in and is cleared when the user closes SYSPRO normally. If the user does not close SYSPRO “gracefully”, the “Y” value can linger in the database.

In that case, the “Operator already logged in” message appears the next time the operator signs in. The user can choose to proceed, which clears any lingering operator entries. If the user is in fact already signed in elsewhere, the system terminates the previous session.
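To make the flag’s lifecycle concrete, here is a toy Python model of the behavior described above. It is a hypothetical illustration only, not SYSPRO’s implementation: the class and method names are invented, the operator code “ADMIN” is an example, and because the actual AdmOperator column name is not given here, the model simply stores “Y” per operator code.

```python
from dataclasses import dataclass, field


@dataclass
class OperatorTable:
    """Hypothetical toy model of the AdmOperator sign-in flag (not SYSPRO code)."""

    # Operator code -> "Y" while the flag is set; the key is absent once cleared.
    flags: dict[str, str] = field(default_factory=dict)
    # Operator codes that actually have a live session right now.
    live_sessions: set[str] = field(default_factory=set)

    def sign_in(self, operator: str, proceed: bool = True) -> str:
        if self.flags.get(operator) == "Y":
            # This is where the "Operator already logged in" warning is shown.
            if not proceed:
                return "cancelled"
            # Proceeding terminates any previous session and clears the stale flag.
            self.live_sessions.discard(operator)
            self.flags.pop(operator, None)
            outcome = "warned"
        else:
            outcome = "ok"
        # Normal sign-in: set the flag and start a session.
        self.flags[operator] = "Y"
        self.live_sessions.add(operator)
        return outcome

    def sign_out(self, operator: str) -> None:
        # Graceful exit: the session ends and the flag is cleared.
        self.live_sessions.discard(operator)
        self.flags.pop(operator, None)

    def crash(self, operator: str) -> None:
        # Ungraceful exit (PC shutdown, Task Manager kill, network failure,
        # closed browser): the session ends, but nothing clears the flag.
        self.live_sessions.discard(operator)


if __name__ == "__main__":
    table = OperatorTable()
    print(table.sign_in("ADMIN"))  # ok
    table.sign_out("ADMIN")
    print(table.sign_in("ADMIN"))  # ok: the flag was cleared on sign-out
    table.crash("ADMIN")
    print(table.sign_in("ADMIN"))  # warned: the stale "Y" flag triggered the prompt
```

The key point the model captures is that the warning depends only on the stored flag, not on whether a session is really running, which is why a crash leaves the user facing the prompt even though nobody is signed in.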

What causes this “Operator already logged in” message?

Besides the intended case of the operator already being signed in on another computer, the message also appears when a user fails to close SYSPRO “gracefully”. Examples include:

- The user shuts down their PC while SYSPRO is running.

- The user closes SYSPRO forcefully using the Windows Task Manager. This is common when SYSPRO freezes or crashes.

- A network failure interrupts communication between SYSPRO and the application server.

- The user closes their web browser while using SYSPRO Avanti without first logging out of the application.

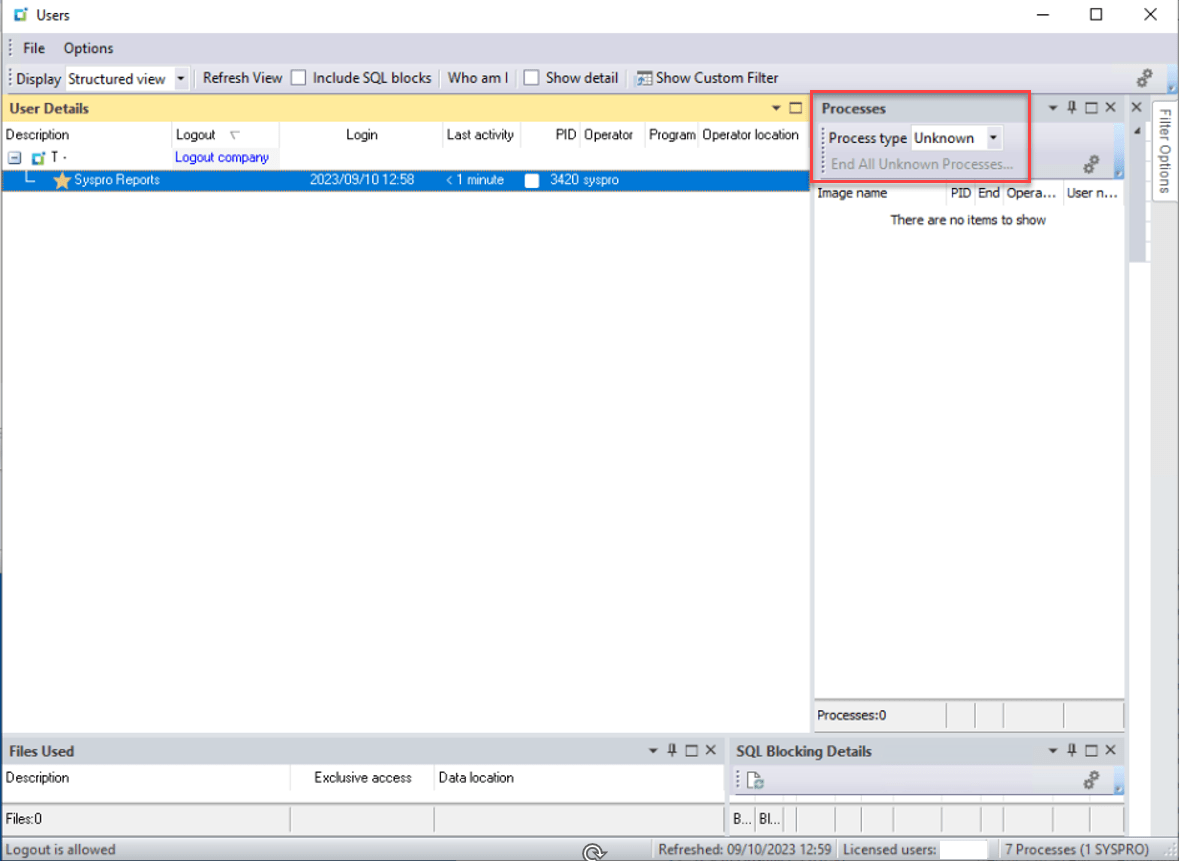

Some of these events may also leave “Unknown Processes” lingering in SYSPRO. These must be closed using SYSPRO’s administrative tools. To learn more about these processes, see our article on Handling Unknown Processes in SYSPRO.

So, what should you do about this message?

Clicking “OK” to proceed is all you need to do. If the warning appears because the user is in fact already signed in, the previous session is simply terminated. If it appears for any of the other reasons outlined above, the incorrect flags are cleared from the database and the fields are reset to their intended status. It is worth telling users that this message is harmless and that they can safely proceed as long as they do not believe their operator is signed in anywhere else.