ChatGPT Security? Tell Me About Your Motherboard

ChatGPT security concerns reveal that business owners are hesitant to let AI replace humans.

In November 2022, OpenAI introduced ChatGPT, an artificially intelligent, natural language chatbot. ChatGPT interacts with its users in uncannily humanlike and intelligent ways.

ChatGPT (Chat Generative Pre-trained Transformer) is a type of artificial intelligence technology designed to improve the way people interact with machines. While it is intended to provide faster and more intuitive responses to queries, it also carries potential security risks, especially for business owners.

The main concern is that, because the technology is complex and hard to audit, it could result in the loss of private data at great cost to companies and their employees. Furthermore, the technology could lead to a lack of control over data and give hackers the power to manipulate user behavior. This could be particularly damaging to businesses that rely on personal data to make decisions, such as financial services firms.

Additionally, ChatGPT could cause unintended consequences, such as decreased privacy and a lack of transparency. Therefore, it is essential to understand the implications of this technology before it is put into use.

The capabilities of ChatGPT and other artificial intelligence (AI) platforms are truly astounding. Users can ask ChatGPT questions and expect meaningful, often accurate answers. However, these advancements in AI and chatbot technology come with their own set of compliance, privacy, and cybersecurity concerns.

For instance, as these AI platforms become more sophisticated, they may begin to store more personal data and analyze user behavior. This could lead to potential privacy violations and other security risks:

- AI-powered chatbots are attractive targets for malicious attacks: hackers may try to exploit vulnerabilities in AI platforms to gain access to sensitive information, manipulate data, or disrupt operations.

- AI-powered chatbots may be vulnerable to social engineering attacks, in which hackers use techniques such as phishing, impersonation, and disinformation to gain access to systems or manipulate people.

- AI-powered chatbots may be vulnerable to data poisoning attacks, in which hackers feed malicious data into AI systems to corrupt their output.

- AI-powered chatbots may be vulnerable to adversarial attacks, in which hackers use carefully crafted inputs to fool the AI system into producing incorrect results.

These attacks can be used to gain access to valuable data, disrupt operations, or even cause physical harm. As such, it is important for businesses to take the necessary steps to protect their AI platforms from potential cyber threats.

The question-and-answer format of a chat-based AI tool encourages users to share information, and the tool can collect personal data along the way, making it easier to target specific audiences with tailored content.

AI security issues surface greater challenges in company data management.

Sophisticated chatbots provide an efficient way to generate content, allowing users to respond quickly to customer requests or create high-quality content. As AI systems collect data, threat actors can scavenge for personal data, such as payment information or an email address. Something immediately helpful in customer relationship management soon becomes a data management nightmare.
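One way to reduce this exposure is to strip obvious personal data from text before it ever reaches a chatbot. The following is a minimal Python sketch, not a complete solution: the function name `redact` and both patterns are assumptions made for illustration, and real personal-data detection needs much broader coverage than two regular expressions.

```python
import re

# Hypothetical patterns for illustration only.
# Matches common email address shapes.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Matches runs of 13 to 16 digits, optionally separated by spaces or hyphens.
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")


def redact(text: str) -> str:
    """Replace email addresses and card-like digit runs with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = CARD_RE.sub("[CARD]", text)
    return text


if __name__ == "__main__":
    message = "Contact jane@example.com, card 4111 1111 1111 1111."
    print(redact(message))
```

A filter like this would sit between the customer-facing form and the AI tool, so that payment details and email addresses are not stored in chat logs in the first place.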

Aside from the entertainment and educational capabilities of this new AI technology, ChatGPT and rival AI platforms have the potential to revolutionize the internet and the workplace.

In the technology realm, IT workers can use ChatGPT to speed up development by asking the tool to write or revise code. Considering the capabilities of AI platforms, it’s no wonder that companies are investing in and implementing AI technology.

However, like many other technological advances in history, AI platforms carry potential privacy and cybersecurity risks. Recently, Italy, Spain, and other European countries have raised concerns about the potential privacy violations that could arise from using ChatGPT. As a result, these countries have sought to introduce new regulations to ensure that ChatGPT respects the privacy of its users.

In particular, these regulations would require the platform to limit the collection, use, and disclosure of users’ personal data, as well as to ensure that users are able to access, modify, or delete the personal data they have provided to the platform. The regulations would require ChatGPT to take appropriate steps to ensure that any personal data collected is adequately protected from unauthorized access, use, or disclosure. This includes implementing appropriate technical and organizational measures such as encryption, pseudonymization, and secure storage systems.
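To make one of these measures concrete, pseudonymization can be sketched as replacing an identifier with a keyed hash, so that records can still be linked to each other without storing the raw value. The Python example below is an illustration under stated assumptions: the function name `pseudonymize` and the sample key are hypothetical, and a real deployment would keep the key in a secrets manager and follow the applicable regulatory guidance.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Return a keyed SHA-256 digest that stands in for the raw identifier.

    The same value and key always produce the same pseudonym, so records
    can be joined, but the original value cannot be read from the result.
    """
    # Normalize so that "Jane@Example.com " and "jane@example.com" match.
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(secret_key, normalized, hashlib.sha256).hexdigest()


if __name__ == "__main__":
    # Hypothetical key for demonstration; never hard-code a real key.
    key = b"demo-key-not-for-production"
    print(pseudonymize("jane@example.com", key))
```

Using a keyed hash (HMAC) rather than a plain hash means that someone without the key cannot simply hash a list of known email addresses and compare the results.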

ChatGPT would also be required to provide users with clear and detailed information about how their personal data is being used, such as the purposes for which it is being collected and processed, the categories of data being collected, how long it will be stored, and who it will be shared with. Furthermore, ChatGPT would need to ensure that users are aware of their rights in relation to their personal data, including their right to access and to request rectification or deletion of their data.

Some countries have gone further and restricted or temporarily blocked ChatGPT.

In the United States, the Biden administration plans to roll out a comprehensive national security strategy to address the growing threat of hacking and malicious use of artificial intelligence (AI) platforms. This strategy will involve the coordination of multiple federal departments and agencies, including the Department of Defense, the Department of Homeland Security, the Department of Justice, and the Office of the Director of National Intelligence. It will also require close coordination with international partners and allies, as well as the private sector and civil society organizations, to ensure that the strategy is effective and comprehensive in scope.

The strategy will include a focus on protecting critical infrastructure, strengthening deterrence and detection capabilities, improving information sharing and collaboration, and developing new technologies to protect against cyber threats and malicious AI use. The strategy will also involve enhancing international cooperation and engagement to counter malicious cyber activities, as well as increasing public and private investments in cybersecurity research and development.

The Biden administration will also seek to build public-private partnerships to improve the security of both public and private sector networks and systems.

AI platforms are becoming increasingly popular because they are innovative and highly capable. However, these platforms are not without their risks and need to be assessed by multiple parties.

Cybercriminals are constantly looking for ways to take advantage of these platforms, targeting them in order to steal confidential information, generate malicious software, or gain access to data systems. These types of cyber attacks can have serious implications for the security of the platform and its users, resulting in the loss of valuable data, financial information, and sensitive personal information. Therefore, it is essential that organizations take the necessary steps to protect their AI platforms against these types of malicious attacks. This includes implementing robust security measures and regularly monitoring the platform for any suspicious activities. Additionally, it is important to stay up to date with the latest cybersecurity trends and technologies in order to ensure that the AI platform remains secure and protected.
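As one illustration of what monitoring for suspicious activity can mean in practice, the Python sketch below flags a client that sends an unusually high number of requests within a short time window. The class name `RateMonitor`, its parameters, and the thresholds are assumptions chosen for the example; production monitoring would combine many more signals than request volume.

```python
from collections import defaultdict, deque


class RateMonitor:
    """Flag clients that exceed max_requests within window_seconds."""

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        # One queue of recent request timestamps per client.
        self._events = defaultdict(deque)

    def record(self, client_id: str, timestamp: float) -> bool:
        """Record a request; return True if the client is over the limit."""
        events = self._events[client_id]
        events.append(timestamp)
        # Drop timestamps that have fallen out of the sliding window.
        while events and timestamp - events[0] > self.window_seconds:
            events.popleft()
        return len(events) > self.max_requests


if __name__ == "__main__":
    # Hypothetical threshold: more than 3 requests in 10 seconds is suspicious.
    monitor = RateMonitor(max_requests=3, window_seconds=10.0)
    for t in (0.0, 1.0, 2.0, 3.0):
        print(t, monitor.record("client-a", t))
```

A flagged client would typically trigger an alert or a temporary block while a person reviews the activity.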

Although OpenAI has programmed ChatGPT with rules intended to prevent abuse, hackers have already figured out how to “jailbreak” the platform. In as little as a minute, hackers can generate malicious code for criminal purposes. Before ChatGPT, the same work may have taken days or even weeks.

AI-generated malware and cybersecurity attacks have already occurred. For example, hackers recently used ChatGPT to generate apps that successfully hijacked Facebook users’ accounts.

Preventing cybersecurity attacks and data breaches is of utmost importance for companies that want to protect their sensitive data and minimize their costs. Now that hackers are using AI platforms to further their criminal activities, it is more imperative than ever for companies to seek the best security solutions.

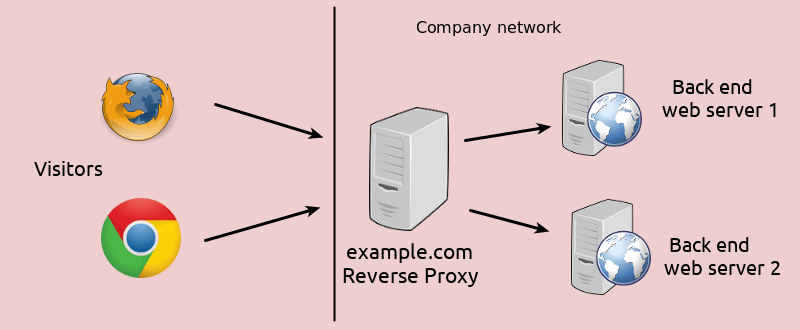

EstesGroup offers EstesCloud services to protect companies’ private data and systems from cybercriminals who may use new AI platforms with malicious intent. EstesCloud protects companies in a changing society in which AI technology is accelerating and enhancing hackers’ criminal activities. ChatGPT security is included in the private cloud and hybrid cloud infrastructures that we create for our clients.

ChatGPT security isn’t an issue when your powerful, highly capable AI and ERP tools are protected in a reputable data center. EstesGroup is ready to protect companies from hackers who use ChatGPT and other AI platforms to attempt to breach their data systems. The new AI technology will inevitably advance in the future, and as companies embrace and implement AI platforms, security solutions, like EstesCloud, will be necessary to safeguard private data and protect data systems.

EstesGroup realizes that innovation requires responsibility and security solutions, and the Estes team of highly skilled and dedicated professionals is ready to assist companies that seek the best cloud protection. Only time will tell how AI platforms will transform the workplace, but companies can rest assured that EstesGroup is ready for an artificially intelligent future.