Troubleshooting SYSPRO: General SYSPRO 8 Instabilities

Is Troubleshooting SYSPRO Troubling You?

General issues are to be expected when running client-server ERP software the size of SYSPRO. Client machines that stop responding, crash, or get disconnected are not unusual. From an administrative perspective, it is important to know the factors that can affect general system stability. In this article, we outline some of the factors that can cause these problems, along with some tools to know, use, and review when diagnosing general SYSPRO instability.

Antivirus and Firewall Exceptions

One of the usual offenders behind SYSPRO issues is antivirus software. SYSPRO opens the required ports in the Windows Firewall when it is installed; however, most sites use additional antivirus and/or firewall software that has to be configured separately. SYSPRO 8 uses ports 30250 and 3702 by default, but be sure to verify the ports used in your own environment.
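To confirm from a client machine that a firewall or antivirus product is not blocking the connection, a quick reachability test can help. The following is a minimal sketch, not a SYSPRO tool: the host name `syspro-app01` is a hypothetical placeholder, and the check covers TCP only, so it says nothing about a port that your environment uses over UDP.

```python
import socket


def tcp_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host name and attempts a TCP connect.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False


# Example usage (the server name is an assumption; substitute your own):
#
#   for port in (30250, 3702):
#       state = "reachable" if tcp_port_open("syspro-app01", port) else "blocked or closed"
#       print(f"syspro-app01:{port} is {state}")
```

A result of "blocked or closed" does not by itself identify the cause. It only tells you that the port is worth checking in your firewall and antivirus exception lists.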

If you see installation errors relating to SYSPRO clients or client-side reporting tools, you can try disabling local antivirus during the installation. Make sure to turn it back on after the installation has been completed.

SQL Health Dashboard

The SQL Health Dashboard (IMPDBS) is a built-in SYSPRO tool that analyzes your SQL Server and reports back with any potential issues. When you open the program, it scans your SYSPRO databases one by one and gives you an overview of potential issues. Issues reported here are more likely to be system-wide than specific to individual client machines. Seeing this program report “green” across the board is vital for ensuring that your SQL Server is running at its best.

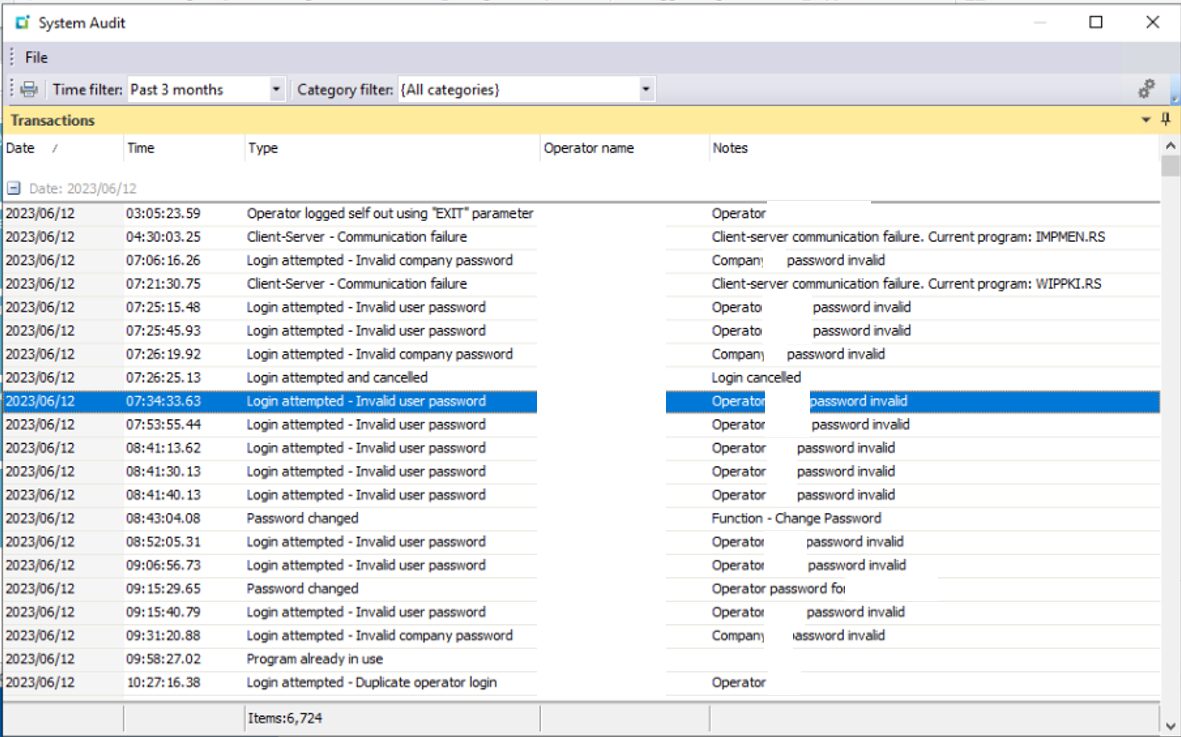

System-Audit Query

System-Audit Query (IMPJNS) is another built-in tool that you can use to diagnose problems in SYSPRO. This one is particularly helpful for determining whether disconnects or errors are unique to a specific operator and/or program. You can use the program to filter for client-server disconnects and determine who is encountering them, when they are encountering them, and where they are encountering them. This program can’t fix the problem, but it can tell you if one exists!

Client Folder Permissions

By default, the client-side installation of SYSPRO is found at “C:\SYSPROClient”. This folder contains the local SYSPRO install, user settings, and program files. Depending on the default settings for a domain user, that user may have insufficient access to this folder. If a local client machine is having unusual errors or access problems, try granting full control of this folder to authenticated users. You can do so by right-clicking the “SYSPROClient” folder, going to “Properties”, and editing the permissions on the “Security” tab.
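Before changing permissions, it can be useful to confirm that access is actually the problem. The sketch below, run as the affected user, probes whether the folder accepts a new file, and separately builds (without running) an `icacls` command that would grant full control to Authenticated Users. Both function names are hypothetical helpers written for this illustration, and the write probe is only a rough proxy: passing it does not prove the user has full control.

```python
import os
import tempfile
from typing import List


def folder_is_writable(folder: str) -> bool:
    """Return True if the current user can create a file in folder."""
    if not os.path.isdir(folder):
        return False
    try:
        # The temporary file is removed automatically when it is closed.
        with tempfile.TemporaryFile(dir=folder):
            pass
        return True
    except OSError:
        # PermissionError (a subclass of OSError) lands here.
        return False


def icacls_grant_command(folder: str) -> List[str]:
    """Build, but do not run, an icacls command for full control.

    (OI)(CI) makes the grant inherit to files and subfolders, F is full
    control, and /T applies it to existing content under the folder.
    """
    return ["icacls", folder, "/grant", "Authenticated Users:(OI)(CI)F", "/T"]


# Example usage on a client machine (the path is the default from this article):
#
#   if not folder_is_writable(r"C:\SYSPROClient"):
#       print(" ".join(icacls_grant_command(r"C:\SYSPROClient")))
```

The command is only printed here so that an administrator can review it and run it from an elevated prompt, in line with your site's security policy.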

Check Resource Utilization

SYSPRO uses significant system resources during certain business functions and report generation. To ensure that SYSPRO has sufficient resources to perform its tasks, review the hardware of local client machines as well as memory availability on the SYSPRO server itself. If the server is short on resources, the result can be a system-wide slowdown for all client machines connected to SYSPRO.
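A spot check of CPU and memory can be scripted as well. The sketch below assumes the third-party `psutil` package is installed for collecting the readings. The thresholds (85% CPU, 15% free memory) are illustrative assumptions, not SYSPRO recommendations, so tune them to your own baseline.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ResourceSnapshot:
    cpu_percent: float
    memory_total_mb: float
    memory_available_mb: float


def collect_snapshot() -> ResourceSnapshot:
    """Read current CPU and memory figures (requires the psutil package)."""
    import psutil  # third-party; imported here so the rest works without it

    mem = psutil.virtual_memory()
    mb = 1024 * 1024
    return ResourceSnapshot(
        cpu_percent=psutil.cpu_percent(interval=1.0),  # sampled over 1 second
        memory_total_mb=mem.total / mb,
        memory_available_mb=mem.available / mb,
    )


def resource_warnings(
    snap: ResourceSnapshot,
    max_cpu_percent: float = 85.0,        # assumed threshold
    min_free_memory_ratio: float = 0.15,  # assumed threshold
) -> List[str]:
    """Return a human-readable warning for each threshold that is breached."""
    warnings = []
    if snap.cpu_percent > max_cpu_percent:
        warnings.append(f"CPU at {snap.cpu_percent:.0f}%")
    free_ratio = snap.memory_available_mb / snap.memory_total_mb
    if free_ratio < min_free_memory_ratio:
        warnings.append(f"Only {free_ratio:.0%} of memory available")
    return warnings


# Example usage on the SYSPRO server or a client machine:
#
#   for message in resource_warnings(collect_snapshot()):
#       print(message)
```

A single reading is only a snapshot. Sampling at intervals during report runs or other heavy business functions gives a better picture of whether the server is regularly starved for resources.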

Troubleshooting SYSPRO with EstesGroup Experts

Troubleshooting general SYSPRO 8 instabilities can be a complex and time-consuming process. From managing antivirus and firewall exceptions to monitoring SQL health and system resource utilization, there are numerous factors to consider when diagnosing and resolving these issues.

However, by leveraging the power of private cloud hosting through EstesGroup, you can simplify your SYSPRO deployment and minimize the risk of encountering these instabilities altogether. With our expertise in managing SYSPRO environments and our state-of-the-art private cloud infrastructure, you can focus on running your business while we handle the technical complexities.